If you’ve earned your living in the IT field for any length of time, you know that words and phrases come and go with regularity. “Hybrid” is one of the words du jour. And it’s a good word. My only quibble is that those of us who have built integration technology over the last few decades have been using this term for quite some time.

In the modern technology vernacular, “hybrid” is being used in two related ways:

- “Hybrid computing,” or what we used to refer to as “heterogeneous computing,” refers to systems that use more than one kind of processor, operating system, application development platform, and/or run-time platform. Systems constructed in this manner gain performance and/or efficiency by creating a “division of labor” that maximizes the value and contribution of each component.

- In the age of “cloud computing,” it should not be surprising that the term “hybrid cloud” has also emerged. Hybrid cloud is a cloud computing environment that uses a mix of on-premises, private cloud and third-party public cloud services with orchestration between the various platforms. By allowing workloads to move between private and public clouds as computing needs and costs change, hybrid cloud gives businesses greater flexibility and more data deployment options.

Regardless of the definitional specifics, the motivation behind the use of hybrid environments often boils down to “horses for courses” (in idiomatic terms).

Clearly, this hybrid world would not have emerged had it not been for a decade of precursor work that has promoted the continued decoupling of applications, data and platforms. But decoupling was not the only driver in this process. Virtualization has also been occurring in all three areas: apps, data, and platforms. These forces have combined to create the API-centric world that we are now just beginning to exploit.

Many IT professionals are unaware that these same forces, decoupling and virtualization, have also been transforming the IBM mainframe environment. While many still view the IBM mainframe as being synonymous with a monolithic architecture, this is simply not the case.

As an example of decoupling, for over 15 years my company’s customers have been using our high-performance integration solutions to:

- Break apart high-value monolithic applications

- Expose existing business logic as scalable web services

- Build virtual applications comprising mainframe and non-mainframe apps/data

- Do it in a manner that does not require any change to the underlying mainframe applications
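As a rough illustration of the last point, the sketch below wraps an unchanged legacy routine behind a JSON-friendly function. Everything in it is hypothetical: `legacyInquiry` stands in for an existing mainframe program that accepts and returns fixed-width records, the field layout is invented for the example, and `memberService` is not any product's actual API.

```javascript
// Hypothetical stand-in for an existing mainframe program. It accepts a
// fixed-width request record and returns a fixed-width response record.
// The wrapper below never modifies it.
function legacyInquiry(record) {
  const memberId = record.slice(0, 8);
  // Invented layout: 8-char member id, 20-char name, 1-char status flag.
  return memberId + "JANE DOE".padEnd(20, " ") + "A";
}

// Integration-layer wrapper: JSON in, JSON out.
function memberService(request) {
  // Build the fixed-width input the legacy program expects.
  const input = String(request.memberId).padStart(8, "0");
  const output = legacyInquiry(input);

  // Parse the fixed-width response into a plain object.
  return {
    memberId: output.slice(0, 8),
    name: output.slice(8, 28).trimEnd(),
    active: output.charAt(28) === "A",
  };
}

console.log(JSON.stringify(memberService({ memberId: 4217 })));
```

The design choice worth noting is that all format knowledge lives in the wrapper, so callers see only JSON while the legacy routine keeps its original interface.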

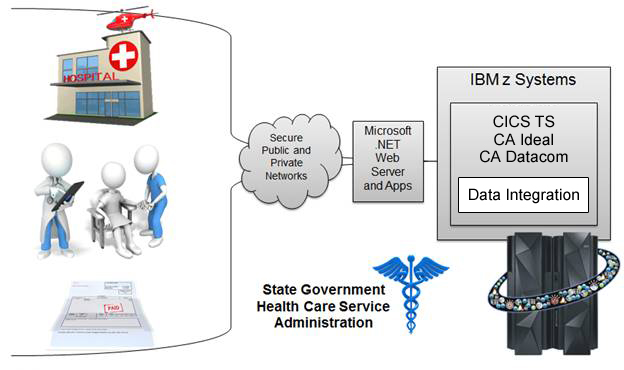

Figure 1 illustrates how one state used this approach to modernize its public health care administration services.

Figure 1. Sample Hybrid Environment using Mobile, Distributed and Mainframe Platforms

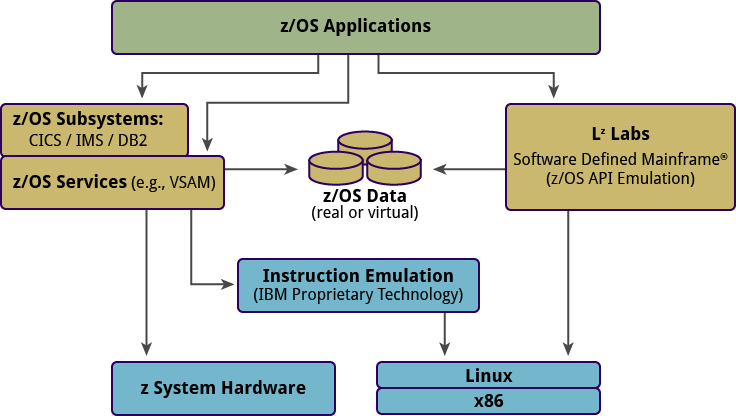

As an example of virtualization, you need look no further than IBM’s own efforts—and more recently those of LzLabs. As illustrated in Figure 2, the execution platform for traditional z/OS applications is continuing to evolve.

Figure 2. IBM Mainframe Virtualization Continues to Evolve

While it might be obvious, I can’t risk not making this point:

✓ The movement toward a hybrid world does not make the mainframe less relevant. In fact, it makes it more relevant.

Why? Because high-value business logic – that runs perfectly well and cost-effectively on the mainframe – can stay on the mainframe.

Isn’t that the point of the hybrid world? Horses for Courses.

But this brings us to a critical question: In a fully decoupled, virtualized, cloud-based and API-centric world, what must you have? The answer:

✓ A robust and flexible integration architecture.

But what does this mean? First and foremost, it means that the technology elements of the integration architecture should be as common as possible across the hybrid environment. Stated differently, it should be as “adapter-less” as possible (or the adapters should be as efficient as possible). This is why the foundation of the most effective z/OS integration platform is Server Side JavaScript (SSJS). By standardizing on JavaScript, our customers are able to use the same language to create:

- A compelling end-user experience

- Powerful cloud-based applications

- A scalable integration layer on the mainframe that enables access to existing business logic

This is also why we ported Redis to run under z/OS. Industry standards and modern technology elements drive our thinking.
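The article does not spell out how a Redis cache is used alongside the integration layer. One common arrangement is the cache-aside pattern, sketched below under stated assumptions: a plain `Map` stands in for the Redis store, and `fetchAccountFromMainframe` and `getAccount` are hypothetical names invented for the example.

```javascript
// In-memory stand-in for a Redis-style key/value store (assumption: a real
// deployment would call a Redis client here instead of a Map).
const cache = new Map();

let backendCalls = 0;

// Hypothetical, relatively expensive call into existing business logic.
function fetchAccountFromMainframe(accountId) {
  backendCalls += 1;
  return { accountId, balance: 1250.75 };
}

// Cache-aside: check the cache first, fall back to the backend on a miss,
// then store the JSON result for later requests.
function getAccount(accountId) {
  const key = "account:" + accountId;
  const cached = cache.get(key);
  if (cached !== undefined) {
    return JSON.parse(cached);
  }
  const account = fetchAccountFromMainframe(accountId);
  cache.set(key, JSON.stringify(account));
  return account;
}

getAccount("A100"); // miss: calls the backend
getAccount("A100"); // hit: served from the cache
console.log(backendCalls); // 1
```

Storing the value as a JSON string mirrors how string values are typically kept in a key/value store, and it means the cached copy cannot be mutated by accident through a shared object reference.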

As can be seen in Figure 3, a high-performance mainframe integration platform has been specifically designed to exploit the exact same architectural elements as the major cloud service providers.

| Cloud Application Platforms | AWS | Azure | BlueMix | MF Integration platform |
| --- | --- | --- | --- | --- |
| Supports Server Side JavaScript for application and/or integration layer | Yes | Yes | Yes | Yes |
| Optimized for JSON data flows (no data mapping required) | Yes | Yes | Yes | Yes |
| Implements local Redis cache and supports remote Redis access | Yes | Yes | Yes | Yes |
| API-Centric and oriented toward building hybrid applications from micro services | Yes | Yes | Yes | Yes |

Figure 3. Common Attributes of Cloud Platforms and Mainframe Integration

This commonality allows existing mainframe apps/data to participate in a hybrid cloud world with ease.
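To make that concrete, here is a minimal sketch of a hybrid response assembled from two JSON-producing services. Both service functions are hypothetical placeholders (one imagined as mainframe-backed, one as cloud-hosted), and the field names are invented. The point is only that when both sides already speak JSON, combining them takes no separate mapping layer.

```javascript
// Hypothetical mainframe-backed service returning JSON-ready data.
function claimStatusFromMainframe(claimId) {
  return { claimId, status: "APPROVED", amount: 310.0 };
}

// Hypothetical cloud-hosted service returning JSON-ready data.
function contactPrefsFromCloud(claimId) {
  return { claimId, notifyBy: "email" };
}

// Hybrid "virtual application": combine both results into one response.
// No field-by-field mapping layer is needed because both sides already
// produce plain JSON objects.
function claimSummary(claimId) {
  return {
    ...claimStatusFromMainframe(claimId),
    ...contactPrefsFromCloud(claimId),
  };
}

console.log(JSON.stringify(claimSummary("C-9")));
```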

As mainframe integration specialists, we wholeheartedly embrace the brave “new” world of hybrid IT architectures. But please note: Our customers have been doing this for quite a while. In fact, it was with the “hybrid era” in mind that we mapped out our integration technology platform years ago. A high-performance mainframe integration platform allows large organizations to:

- Break apart their historically monolithic applications

- Expose mission-critical business logic as highly-scalable web services

- Do it using the exact same technology that is revolutionizing the world of cloud, mobile and analytics

And that’s really the point: Using the exact same technology.

For decades, we have observed how poor integration techniques have given mainframe apps a bad rap. In some cases, poor integration has even caused the premature death of valuable mainframe apps. We say: No more.

By embracing the same technologies that are foundational to the hybrid world, high-value applications running on IBM mainframes can find their place in the hybrid world. Every day, our customers attest to the fact that this approach enhances z Systems as the System of Record, decreases the complexity of z Systems integration, and lowers the cost of z Systems ownership.

Russ Teubner is a software entrepreneur and a pioneer in the integration of IBM mainframes with UNIX systems, TCP/IP networks and the web. Prior to co-founding HostBridge, he was the CEO of Teubner & Associates responsible for the development of four product lines and listed on Inc.’s 500 fastest growing private companies three times. Upon its sale to Esker SA, he stayed on for three years before founding HostBridge. Early in his career, Russ graduated from Oklahoma State University with a Bachelor’s in Management Science and Computer Systems and stayed on with the University’s computer center where he developed A-Net, a product allowing mainframes to access DEC applications. In addition to his OSU degree, Russ has participated in executive training programs including Stanford, Harvard and Pennsylvania’s Wharton Business School.

Russ is Chairman of the Board for Southwest Bancorp (NASDAQ: OKSB) and has seen it through tremendous changes. His 16 years at Southwest Bancorp have given him an insider’s view on the data processing needs of financial institutions, invaluable to his work at HostBridge.