In today’s digital economy, data offers tremendous opportunities for creating value. Yet extracting insight from real-time enterprise transactions can be an elusive goal. Running deep learning models on high-volume transactional data is difficult with off-platform inference solutions, because latency, variability, and security concerns can make them impractical in response-time-sensitive applications. IBM is addressing this challenge through recent innovations in system and microprocessor design.

Today IBM announced the IBM Telum Processor at Hot Chips; Telum will be the central processor chip for the next generation of IBM Z and LinuxONE systems. Organizations that want to prevent fraud in real time, among other use cases, will welcome these new IBM Z innovations, which are designed to deliver in-transaction inference in real time and at scale.

The 7 nm microprocessor is engineered to meet the demands our clients face in gaining AI-based insights from their data without compromising response time for high-volume transactional workloads. To help meet these needs, IBM Telum is designed with a new dedicated on-chip accelerator for AI inference, enabling real-time AI embedded directly in transactional workloads, alongside improvements in performance, security, and availability:

- The microprocessor contains 8 processor cores, clocked at over 5GHz, with each core supported by a redesigned 32MB private level-2 cache. The level-2 caches interact to form a 256MB virtual level-3 cache and a 2GB level-4 cache. Along with improvements to the processor core itself, the 1.5x growth in cache per core over the z15 generation is designed to enable a significant increase in both per-thread performance and the total capacity IBM can deliver in the next-generation IBM Z system. Telum’s performance improvements are vital for rapid response times in complex transaction systems, especially when augmented with real-time AI inference.

- Telum also features significant innovation in security, with transparent encryption of main memory. Telum’s Secure Execution improvements are designed to provide increased performance and usability for Hyper Protected Virtual Servers and trusted execution environments, making Telum an optimal choice for processing sensitive data in Hybrid Cloud architectures.

- The predecessor IBM z15 chip was designed to enable industry-leading seven-nines (99.99999%) availability for IBM Z and LinuxONE systems. Telum is engineered to improve availability further, with key innovations including a redesigned 8-channel memory interface that is capable of tolerating complete channel or DIMM failures and is designed to transparently recover data without impact to response time.
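The cache figures in the first bullet can be cross-checked with simple arithmetic. The sketch below uses only the numbers quoted above; the function names are hypothetical, and the reading that the 2GB level-4 figure corresponds to a whole number of chips is an inference from those numbers, not something the article states.

```python
# Figures quoted in the article (per chip unless noted).
CORES_PER_CHIP = 8
L2_PER_CORE_MB = 32
VIRTUAL_L3_MB = 256
L4_MB = 2 * 1024  # 2GB expressed in MB


def aggregate_l2_mb(cores: int = CORES_PER_CHIP, l2_mb: int = L2_PER_CORE_MB) -> int:
    """Total private level-2 capacity on one chip, in MB."""
    return cores * l2_mb


def chips_matching_l4(l4_mb: int = L4_MB, per_chip_mb: int = VIRTUAL_L3_MB) -> int:
    """How many chips' worth of level-2 cache equals the level-4 figure.

    Assumption: this ratio is an inference from the quoted sizes only.
    """
    return l4_mb // per_chip_mb


print(aggregate_l2_mb())    # 256, matching the 256MB virtual level-3 figure
print(chips_matching_l4())  # 8
```

The first result shows that the virtual level-3 size equals the sum of the eight private level-2 caches on a chip, which is consistent with the statement that the level-2 caches interact to form it.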

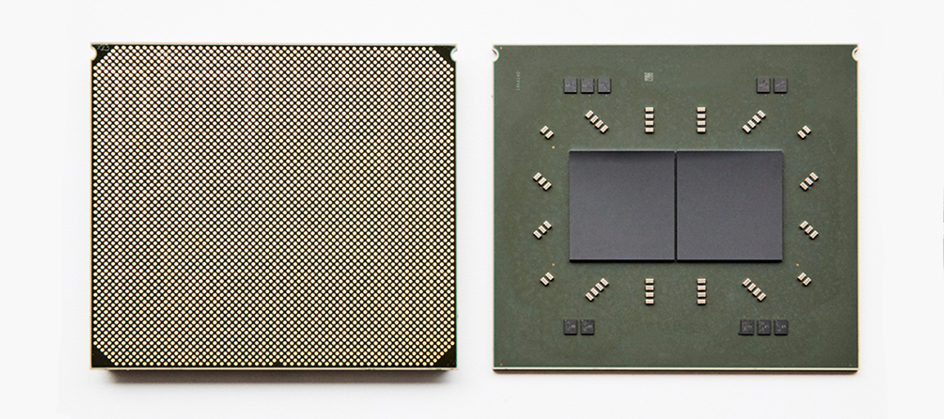

IBM Telum: Next Generation IBM Z Processor Chip

IBM Z processors have a history of embedding purpose-built accelerators, designed to improve performance of common tasks like cryptography, compression, and sorting. Telum adds a new integrated AI accelerator with more than 6 TFLOPs compute capacity per chip. Every core has access to this accelerator and can dynamically leverage the entire compute capacity to minimize inference latency. Due to the centralized accelerator architecture with direct connection to the cache infrastructure, Telum is designed to enable extremely low latency inference for response-time sensitive workloads. With planned system support for up to 200 TFLOPs, the AI acceleration is also designed to scale up to the requirements of the most demanding workloads.
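As a rough sanity check on the scale-up claim, the two throughput figures above bound how many accelerator-equipped chips a system would need to reach the planned total. This is a back-of-the-envelope sketch: the function name is hypothetical, and because the article says "more than 6 TFLOPs" per chip, the result is an upper bound on the chip count rather than a stated configuration.

```python
import math

PER_CHIP_TFLOPS = 6.0   # article: "more than 6 TFLOPs" per chip, so a lower bound
SYSTEM_TFLOPS = 200.0   # article: planned system support for "up to 200 TFLOPs"


def max_chips_implied(system_tflops: float = SYSTEM_TFLOPS,
                      per_chip_tflops: float = PER_CHIP_TFLOPS) -> int:
    """Upper bound on chips needed to reach the system figure.

    Since each chip delivers at least per_chip_tflops, no more than
    floor(system / per_chip) chips are needed to stay within the total.
    """
    return math.floor(system_tflops / per_chip_tflops)


print(max_chips_implied())  # 33
```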

Keeping data on IBM Z offers many latency and data protection advantages. The IBM Telum processor is designed to help clients maximize these benefits, providing low and consistent latency for embedding AI into response-time-sensitive transactions. This can enable customers to use the results of AI inference to better control the outcome of transactions before they complete. For example, AI can support risk mitigation in clearing and settlement applications by predicting which trades or transactions have high risk exposure and proposing solutions for a more efficient settlement process. Faster remediation of questionable transactions can help clients prevent costly consequences and negative business impact.

For instance, one international bank uses AI on IBM Z as part of its credit card authorization process instead of using an off-platform inference solution. As a result, the bank can detect fraud during credit card transaction authorization. For the future, this client is looking to attain sub-millisecond response times with complex deep learning AI models, while maintaining the scale and throughput needed to score up to 100,000 transactions per second, nearly a 10X increase over what it can achieve today. The client wants consistent and reliable inference response times, with low-millisecond latency, so that it can examine every transaction for fraud. Telum is designed to help meet such challenging requirements, specifically running combined transactional and AI workloads at scale.
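To make the bank's target concrete, the sketch below applies Little's law (in-flight work = throughput × latency) to the quoted 100,000 transactions per second, and divides the per-chip accelerator figure by that rate to get a per-transaction compute budget. The function names are hypothetical, and the budget assumes a single chip's accelerator serves the entire load at its quoted peak, which the article does not claim; treat it as an illustrative ceiling only.

```python
def in_flight_inferences(throughput_tps: float, latency_ms: float) -> float:
    """Little's law: average number of inferences in progress at once."""
    return throughput_tps * latency_ms / 1000.0


def flop_budget_per_txn(chip_tflops: float, throughput_tps: float) -> float:
    """Floating-point operations available per transaction.

    Assumption: one chip's accelerator handles the full load at peak rate.
    """
    return chip_tflops * 1e12 / throughput_tps


TARGET_TPS = 100_000  # article: up to 100,000 scored transactions per second

# At a 1 ms inference latency, about 100 inferences are in flight on average.
print(in_flight_inferences(TARGET_TPS, latency_ms=1.0))

# With the quoted 6 TFLOPs per chip, each transaction could use up to
# roughly 60 million floating-point operations under the assumption above.
print(flop_budget_per_txn(6.0, TARGET_TPS))
```

Halving the latency halves the number of concurrent inferences the system has to hold, which is one reason low and consistent latency matters as much as raw throughput here.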

In today’s IT landscape, it’s not just about the data you have, but how you can use it for maximum insight. For this reason, knowing how to use AI and having ready-made infrastructure to support it has become the new standard in computing. The first step in becoming future-ready starts with Telum.


Originally published on the IBM Blogs


Dr. Christian Jacobi is a Distinguished Engineer and Chief Architect for microprocessors at IBM with over 20 years of experience in IBM Z and IBM POWER systems. Christian is highly experienced in micro-architecture, logic design, performance analysis, verification, development, and hardware and software co-optimization. Dr. Jacobi was the Chief Architect for the z15 microprocessor and is currently working on next-generation IBM Z systems.